Client Writeback

Client Writeback Overview

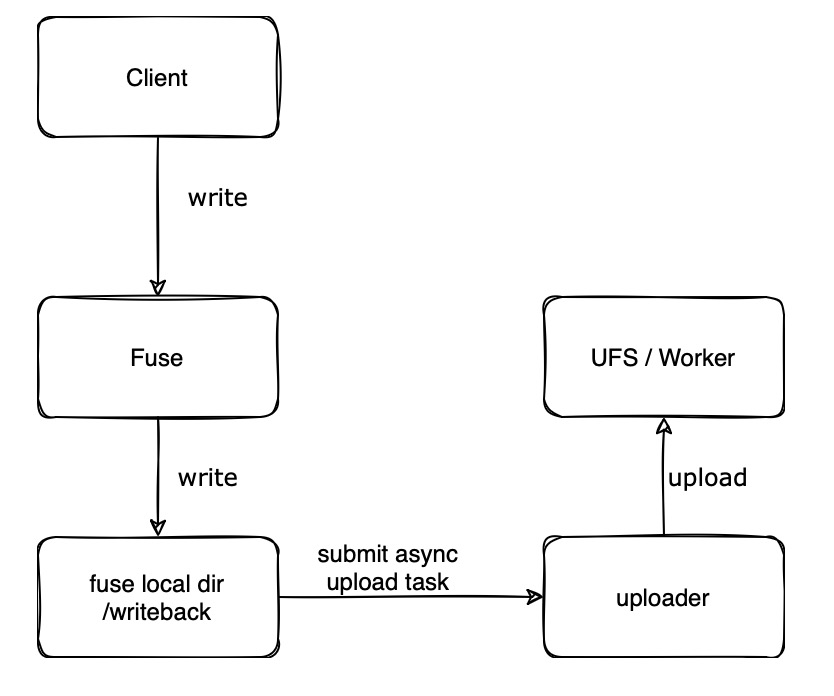

This document describes how to enable the Client Writeback feature in Alluxio. When users write data, the data is first written to the local disk and then asynchronously uploaded to the UFS (Under File System). Currently, the Client Writeback feature is only available in Alluxio FUSE.

The writeback feature saves network overhead and UFS API call costs when writing data through Alluxio, resulting in:

- Increased throughput when sequentially writing large files, reaching speeds close to local disk speeds.

- Improved performance for writing small files, enabling a large number of small files to be written in a short period.

Limitations and Applicable Scenarios

Since writeback uses the local disk to buffer data, its capability is limited by the local disk:

- If the local disk fails during the upload process, data will be lost.

- High-performance SSDs are required for the local disk; ordinary HDDs do not provide sufficient performance to achieve acceleration.

Since writeback uploads files asynchronously, the written file cannot be read until the upload is complete.

Based on the above limitations, we recommend restricting the use of writeback to scenarios that are performance-sensitive but can tolerate a small probability of data loss, such as model checkpoint writing scenarios.

Enabling Client Writeback

Add the following configuration in alluxio-site.properties:

    # Enable the writeback feature
    alluxio.user.write.back.enabled=true

    # Path to the JSON file that lists the Alluxio paths for which writeback is enabled
    alluxio.user.fuse.path.based.config.file.path=${ALLUXIO_HOME}/conf/pathConfig.json

    # Local disk quota for writeback; the default is 0, meaning no limit on disk usage
    alluxio.user.fuse.write.back.dir.quota=1TB

    # Local directory used by writeback, which must be on an SSD
    alluxio.user.fuse.write.back.dir=/data/alluxio/writeback

The format of the pathConfig.json file is as follows:

    [
      {
        "pathRegex": "/user/ai_user1/checkpoint/.*",
        "localWriteBackEnabled": true
      },
      {
        "pathRegex": "/user/ai_user2/checkpoint/.*",
        "localWriteBackEnabled": true
      }
    ]

Note that the paths here are Alluxio paths, not the mount paths of Alluxio FUSE.

Writeback only takes effect for paths that match an entry in pathConfig.json; write requests to any other path do not use writeback.
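The distinction between Alluxio paths and FUSE mount paths can be checked offline with a small script. The sketch below is illustrative only: it assumes that a `pathRegex` must match the whole Alluxio path and that the first matching rule wins, neither of which is stated above, and the mount point `/mnt/alluxio` and the helper names are hypothetical.

```python
import json
import re
from pathlib import PurePosixPath

# Same structure as the pathConfig.json example above.
PATH_CONFIG = json.loads("""
[
  {"pathRegex": "/user/ai_user1/checkpoint/.*", "localWriteBackEnabled": true},
  {"pathRegex": "/user/ai_user2/checkpoint/.*", "localWriteBackEnabled": true}
]
""")


def to_alluxio_path(fuse_path, mount_point):
    """Strip a (hypothetical) FUSE mount point to obtain the Alluxio path."""
    relative = PurePosixPath(fuse_path).relative_to(mount_point)
    return str(PurePosixPath("/") / relative)


def writeback_enabled(alluxio_path, rules=PATH_CONFIG):
    """Assumption: full-path match, first matching rule decides."""
    for rule in rules:
        if re.fullmatch(rule["pathRegex"], alluxio_path):
            return bool(rule.get("localWriteBackEnabled", False))
    return False


fuse_path = "/mnt/alluxio/user/ai_user1/checkpoint/step-100.pt"
alluxio_path = to_alluxio_path(fuse_path, "/mnt/alluxio")
print(alluxio_path, writeback_enabled(alluxio_path))
# The raw FUSE path does not match, because the rules are written against Alluxio paths.
print(fuse_path, writeback_enabled(fuse_path))
```

Running a check like this against the paths an application actually writes to is a quick way to catch rules that were accidentally written against the mount path.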

By default, writeback throws an exception when the usage of the writeback directory exceeds the value of alluxio.user.fuse.write.back.dir.quota.

Alternatively, writeback can fall back to synchronous writing in this situation. In fallback mode, writeback first uploads the intermediate files already written locally to the UFS before accepting more write requests; from the user's point of view, write requests block while these uploads are in progress. Once the uploads complete, subsequent write requests are written directly to the UFS.

To enable fallback mode, add the following configuration:

alluxio.user.fuse.write.back.degraded.sync.write.on.insufficient.space=true
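The two behaviors on a full writeback directory can be summarized with a toy model. This is not Alluxio's implementation: the function and exception names are hypothetical, the check is modeled as "the write would push usage over the quota", and draining the buffer is reduced to resetting the usage counter.

```python
class QuotaExceededError(Exception):
    """Stands in for the exception thrown when the quota is exceeded."""


def handle_write(size, used, quota, degrade_to_sync):
    """Return (mode, new_used) for a write of `size` bytes.

    quota == 0 means no limit, matching the documented default.
    """
    if quota == 0 or used + size <= quota:
        # Normal case: buffer on local disk, upload asynchronously later.
        return "writeback", used + size
    if degrade_to_sync:
        # Fallback: the caller blocks until buffered files are uploaded
        # (modeled as used -> 0), then this write goes straight to the UFS.
        return "sync", 0
    raise QuotaExceededError(f"{used + size} bytes would exceed quota {quota}")


print(handle_write(10, used=0, quota=100, degrade_to_sync=False))
print(handle_write(10, used=95, quota=100, degrade_to_sync=True))
```

With `degrade_to_sync=False` the second call would raise instead, which corresponds to the default behavior described above.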

Accelerating Small File Writes

When users write files through FUSE, FUSE first checks whether the file exists. If the file does not exist, every such check falls through to the UFS. This causes poor performance when writing a large number of small files, because much of the time is spent on UFS API calls. Writeback can use a bloom filter to determine quickly that a file does not exist, without sending a request to the UFS. To enable this feature, add the following configuration:

alluxio.user.fuse.write.back.status.bloom.filter.enabled=true

The bloom filter has a capacity of 10 million entries and is refreshed every 5 minutes.

If the false positive rate of the bloom filter, which is reported by the WriteBackBloomFilterFpp metric, turns out to be high, you can change the refresh period with the following configuration:

alluxio.user.fuse.write.back.status.bloom.filter.refresh.period=1min
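To illustrate why a bloom filter lets the existence check skip the UFS, here is a minimal, generic bloom filter. It is a sketch of the data structure only, not the filter Alluxio uses; the sizes, the hashing scheme, and all names are assumptions chosen for brevity.

```python
import hashlib


class BloomFilter:
    """Generic bloom filter using double hashing over a SHA-256 digest."""

    def __init__(self, num_bits=1 << 20, num_hashes=5):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits // 8 + 1)

    def _positions(self, key):
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # force an odd step
        return [(h1 + i * h2) % self.num_bits for i in range(self.num_hashes)]

    def add(self, key):
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key):
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(key))


# Paths known to exist in the UFS are loaded into the filter.
known_files = BloomFilter()
known_files.add("/user/ai_user1/checkpoint/step-100.pt")


def needs_ufs_check(path):
    # False means "definitely absent": the UFS call can be skipped.
    # True means "possibly present": the UFS must still be asked.
    return known_files.might_contain(path)


print(needs_ufs_check("/user/ai_user1/checkpoint/step-100.pt"))
print(needs_ufs_check("/user/ai_user1/checkpoint/step-200.pt"))
```

A false positive never produces a wrong answer; it only costs one UFS call that could have been avoided, which is why a high false positive rate shows up as lost acceleration rather than as an error.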

By default, writeback handles empty files specially: they are synchronized directly to the UFS instead of being uploaded asynchronously. If writing empty files also needs to be accelerated, this behavior can be disabled:

alluxio.user.fuse.write.back.sync.flush.empty.file=false

Handling Files That Fail Asynchronous Upload

If the upload of a file fails three consecutive times, the file is marked as upload-failed and moved to the UPLOAD_FAILED subdirectory under the writeback directory.

To prevent data loss, writeback does not delete upload-failed files itself, so handle them promptly once they are detected.

The number of upload-failed files is reported by the UploadManagerUploadFailedFiles metric.
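As a starting point for handling these files, the following sketch lists everything under the UPLOAD_FAILED subdirectory together with its size. It assumes only the layout described above (an UPLOAD_FAILED directory directly under the configured writeback directory); the function name is hypothetical, and what to do with each file, such as re-uploading it manually, is left to the operator.

```python
from pathlib import Path


def list_upload_failed(writeback_dir):
    """Return sorted (path, size_in_bytes) pairs for files under UPLOAD_FAILED."""
    failed_dir = Path(writeback_dir) / "UPLOAD_FAILED"
    if not failed_dir.is_dir():
        return []
    return sorted((p, p.stat().st_size)
                  for p in failed_dir.rglob("*") if p.is_file())


# The directory below is the example value of alluxio.user.fuse.write.back.dir.
for path, size in list_upload_failed("/data/alluxio/writeback"):
    print(f"{size:>12} bytes  {path}")
```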

Metrics

| Metric | Description |
|---|---|
| UploadManagerBytesWriteToUfs | Bytes written to UFS by writeback asynchronously |
| UploadManagerFailedTask | Number of failed writeback asynchronous upload tasks |
| UploadManagerSuccessTask | Number of successful writeback asynchronous upload tasks |
| UploadManagerRunningTask | Number of files currently being uploaded by writeback |
| UploadManagerSpaceCapacity | Maximum disk usage for writeback |
| UploadManagerSpaceUsed | Disk space used by writeback |
| UploadManagerUploadFailedFiles | Number of files in the local UPLOAD_FAILED directory for writeback |
| WriteBackBloomFilterApproximateElements | Number of files recorded by the bloom filter |
| WriteBackBloomFilterFpp | Bloom filter false positive rate |
| WriteBackBloomFilterFullLoadedDirectories | Number of directories fully loaded by the bloom filter |
| WriteBackBloomFilterLoadingDirectories | Number of directories currently being loaded by the bloom filter |
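The metrics above can be combined into simple health checks. The sketch below takes an already collected dictionary of metric values; how the values are scraped is not covered here, and the 90% threshold, the assumption that a capacity of 0 means "no limit", and the function name are all illustrative choices rather than Alluxio defaults.

```python
def writeback_warnings(metrics, usage_alert=0.9):
    """Return human-readable warnings derived from writeback metrics."""
    warnings = []
    capacity = metrics.get("UploadManagerSpaceCapacity", 0)
    used = metrics.get("UploadManagerSpaceUsed", 0)
    # Assumption: a capacity of 0 means no quota is configured.
    if capacity > 0 and used / capacity >= usage_alert:
        warnings.append(f"writeback directory is {used / capacity:.0%} full")
    failed_files = metrics.get("UploadManagerUploadFailedFiles", 0)
    if failed_files > 0:
        warnings.append(f"{failed_files} file(s) waiting in UPLOAD_FAILED")
    return warnings


sample = {
    "UploadManagerSpaceCapacity": 1000,
    "UploadManagerSpaceUsed": 950,
    "UploadManagerUploadFailedFiles": 2,
}
for line in writeback_warnings(sample):
    print(line)
```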