COSBench performance benchmark

COSBench Overview

COSBench is an open-source stress testing tool developed by Intel for object storage.

Alluxio supports a RESTful API that is compatible with the basic operations of the Amazon S3 API, so COSBench can be used for read-write performance testing.

This document describes how to conduct end-to-end testing of Alluxio using COSBench.

Results Summary

| File size | File count | Concurrency per driver | Throughput |
|---|---|---|---|
| 1GB | 10000 | 1 | driver1: 604.03 MB/s; driver2: 592.85 MB/s; driver3: 597.94 MB/s; driver4: 612.99 MB/s; total: 2407.81 MB/s |
| 1GB | 10000 | 16 | driver1: 5.45 GB/s; driver2: 5.1 GB/s; driver3: 4.69 GB/s; driver4: 5.2 GB/s; total: 20.44 GB/s |
| 1GB | 10000 | 128 | driver1: 9.24 GB/s; driver2: 8.59 GB/s; driver3: 10.06 GB/s; driver4: 9.05 GB/s; total: 36.94 GB/s |
| 100KB | 10000 | 1 | driver1: 14.61 MB/s - 146.08 op/s; driver2: 11.96 MB/s - 119.55 op/s; driver3: 11.75 MB/s - 117.46 op/s; driver4: 11.94 MB/s - 119.42 op/s; total: 50.26 MB/s - 502.51 op/s |
| 100KB | 10000 | 16 | driver1: 311.69 MB/s - 3116.87 op/s; driver2: 244.72 MB/s - 2447.15 op/s; driver3: 286.34 MB/s - 2863.35 op/s; driver4: 287.53 MB/s - 2875.26 op/s; total: 1130.28 MB/s - 11302.63 op/s |
| 100KB | 10000 | 128 | driver1: 1.19 GB/s - 11877.99 op/s; driver2: 1.17 GB/s - 11660.19 op/s; driver3: 1.16 GB/s - 11569.5 op/s; driver4: 1.17 GB/s - 11650.17 op/s; total: 4.69 GB/s - 46757.85 op/s |
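The totals in the table are the sums of the per-driver figures, and for the 100KB rows the MB/s and op/s columns are related through the object size. The following is a minimal sketch of that arithmetic, using values copied from the table. The helper names are hypothetical, and the conversion assumes decimal units (1 MB = 1000 KB), which is what the reported figures appear to use.

```python
# Per-driver throughput in MB/s for the 1GB, concurrency 1 row of the table.
drivers_mb_per_s = {
    "driver1": 604.03,
    "driver2": 592.85,
    "driver3": 597.94,
    "driver4": 612.99,
}


def total_throughput(per_driver):
    """Sum per-driver throughput into the cluster-wide total."""
    return round(sum(per_driver.values()), 2)


def ops_to_mb_per_s(ops_per_s, object_size_kb):
    """Convert op/s to MB/s, assuming decimal units (1 MB = 1000 KB)."""
    return ops_per_s * object_size_kb / 1000


total = total_throughput(drivers_mb_per_s)
print(f"total: {total} MB/s")

# driver1 in the 100KB, concurrency 1 row reports 146.08 op/s.
print(f"driver1: {ops_to_mb_per_s(146.08, 100):.2f} MB/s")
```

The per-driver figures in the table are already rounded, so a total recomputed from op/s can differ from the listed total in the last digit.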

The test results are based on an Alluxio cluster configured as follows, with all server instances available on AWS:

- COSBench Cluster: 1 COSBench Controller node and 4 COSBench Driver nodes.

- COSBench Instance: c5n.metal: 72 cores + 192GB memory + 100Gb network

- Alluxio Cluster: 1 Alluxio Coordinator node and 4 Alluxio Worker nodes.

- Alluxio Worker Instance: i3en.metal: 96 cores + 768GB memory + 100Gb network + 8 NVMe drives

- AWS ELB load balancer: Elastic Load Balancing

Preparing the Test Environment

We recommend running COSBench on CentOS 7.0 or later, as unexpected issues may occur in an Ubuntu environment.

Configuring Alluxio

For other Alluxio-related configurations, refer to the Fio Tests section.

- You can configure one or more Alluxio Workers as a cache cluster.

- Configure a load balancer to distribute requests evenly across all Alluxio Worker nodes.

- Ensure that the dataset has been completely loaded into the Alluxio cache from UFS.

Preparing COSBench tool

- Download the COSBench 0.4.2.c4.zip file from the COSBench GitHub website, and decompress it on the server.

- Install the dependency libraries of COSBench.

  - For CentOS, execute the following command to install dependencies:

        sudo yum install nmap-ncat curl java-1.8.0-openjdk-devel -y

-

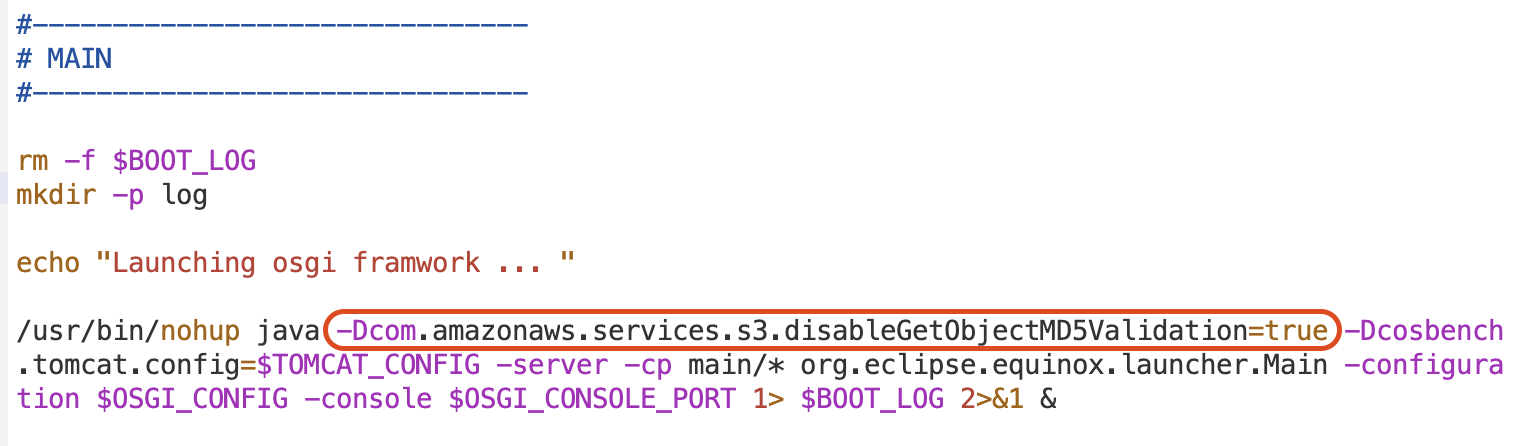

Edit the

cosbench-start.shfile, add the following parameters to the jvm properties, and disable the md5 verification function of s3:-Dcom.amazonaws.services.s3.disableGetObjectMD5Validation=true

- Start the COSBench controller and driver:

  - For CentOS, execute the following command:

        sudo bash start-all.sh

Running the test

- Edit the s3-config-sample.xml file and add task configuration information. Task configuration mainly includes the following five stages:

- init stage: Create the bucket based on the prefix, suffix, and number of containers configured.

- prepare stage: Write data to the bucket for subsequent testing, using the thread count, file size, and file count specified in the configuration.

- main stage: Run the read and write operations of the test with the configured running time and number of threads.

- cleanup stage: Clean up the environment by deleting the data in the bucket so that no data is retained in the cluster.

- dispose stage: Delete the bucket.

Depending on your needs, a test can execute all stages or only some of them.

Note: When using the mount table operation, COSBench cannot create a new bucket. Skip the init and dispose stages and manually create mount points that match the bucket name prefix and container naming rules for subsequent testing.
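To see which bucket names a configuration expects, for example when creating mount points by hand, the naming rule can be sketched as below. This is an illustration rather than COSBench's own code: it assumes the bucket name is a plain concatenation of cprefix, the container index, and csuffix, as the parameter description in this document states, and it only handles the `r(low,high)` range form. The function names are hypothetical.

```python
import re


def expand_range(expr):
    """Expand a range expression such as "r(1,2)" into [1, 2]."""
    match = re.fullmatch(r"r\((\d+),(\d+)\)", expr)
    if match is None:
        raise ValueError(f"unsupported expression: {expr!r}")
    low, high = int(match.group(1)), int(match.group(2))
    return list(range(low, high + 1))


def bucket_names(cprefix, containers, csuffix=""):
    """Return the bucket names formed as cprefix + index + csuffix."""
    return [f"{cprefix}{index}{csuffix}" for index in expand_range(containers)]


# Matches the example given in the parameter description.
print(bucket_names("examplebucket", "r(1,2)", "test"))
# → ['examplebucket1test', 'examplebucket2test']
```

With `cprefix=ufs` and `containers=r(2,2)`, as in the read test later in this document, the same rule yields the single bucket name `ufs2`.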

Parameter description:

| Parameter | Description |
|---|---|
| accesskey, secretkey | Currently, the Alluxio S3 API does not support access keys and secret keys. When supplying an access key to the test tool, use the intended Alluxio ACL username. The secret key is unused, so you may use any dummy value. |
| cprefix | Bucket name prefix, such as "examplebucket" |
| containers | The range of bucket name values. The final bucket name consists of cprefix, containers, and csuffix (if this parameter is set), such as "examplebucket1test", "examplebucket2test" |
| csuffix | Optional. Bucket name suffix, such as "test" |
| oprefix | Object name prefix, such as "testobject", "dir/testobject" |
| objects | The number and range of objects, such as "r(1,10000)", "r(200,500)" |
| osuffix | Object name suffix, such as ".jpg", ".csv" |
| ratio | The read-write ratio of the current task |
| runtime | Test running time |
| workers | The number of threads for the current task |

The example configuration is as follows:

    <?xml version="1.0" encoding="UTF-8" ?>
    <workload name="s3-sample" description="sample benchmark for s3">
      <storage type="s3" config="accesskey=root;secretkey=dump;endpoint=http://localhost:29998;path_style_access=true" />
      <workflow>
        <workstage name="init">
          <work type="init" workers="1" config="cprefix=s3testqwer;containers=r(1,2)" />
        </workstage>
        <workstage name="prepare">
          <work type="prepare" workers="1" config="cprefix=s3testqwer;containers=r(1,2);objects=r(1,10);sizes=c(64)KB" />
        </workstage>
        <workstage name="main">
          <work name="main" workers="8" runtime="30">
            <operation type="read" ratio="80" config="cprefix=s3testqwer;containers=u(1,2);objects=u(1,10)" />
            <operation type="write" ratio="20" config="cprefix=s3testqwer;containers=u(1,2);objects=u(11,20);sizes=c(64)KB" />
          </work>
        </workstage>
        <workstage name="cleanup">
          <work type="cleanup" workers="1" config="cprefix=s3testqwer;containers=r(1,2);objects=r(1,20)" />
        </workstage>
        <workstage name="dispose">
          <work type="dispose" workers="1" config="cprefix=s3testqwer;containers=r(1,2)" />
        </workstage>
      </workflow>
    </workload>

- Run the task:

      bash cli.sh submit conf/s3-config-sample.xml

- Check the task result

Check the task status and result at "http://ip:19088/controller/index.html" (replace ip with the IP address of the COSBench controller node):

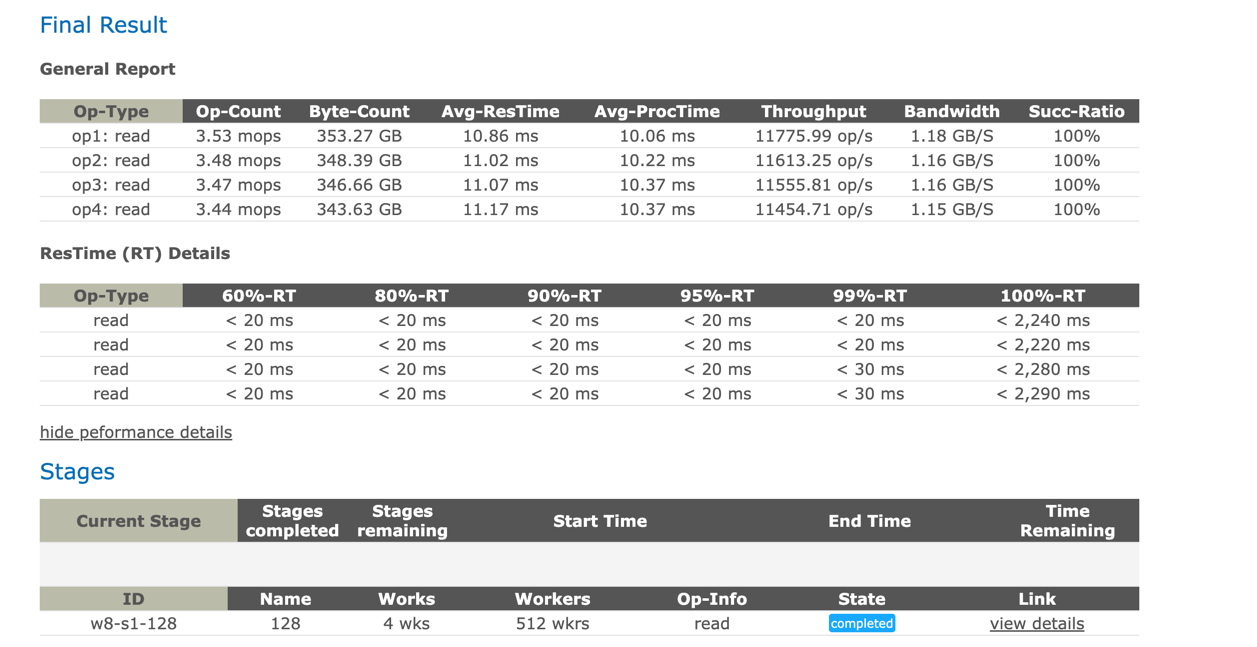

- The following example is a high-concurrency read performance test over 10k 100KB files. It includes the following two stages:

  - prepare stage: 100 worker threads upload 10k 100KB objects.

  - main stage: 4 drivers, each with 128 worker threads, read objects concurrently for 300 seconds.

    <?xml version="1.0" encoding="UTF-8" ?>
    <workload name="s3-sample" description="sample benchmark for s3">
      <storage type="s3" config="accesskey=root;secretkey=dump;endpoint=http://<ip>:29998;path_style_access=true" />
      <workflow>
        <workstage name="prepare">
          <work type="prepare" workers="100" config="cprefix=ufs;containers=r(2,2);oprefix=myobjects;osuffix=.jpg;objects=r(1,10000);sizes=c(100)KB" />
        </workstage>
        <workstage name="128">
          <work name="read" workers="128" driver="driver1" runtime="300">
            <operation type="read" ratio="100" config="cprefix=ufs;containers=r(2,2);oprefix=myobjects;osuffix=.jpg;objects=u(1,10000)" />
          </work>
          <work name="read" workers="128" driver="driver2" runtime="300">
            <operation type="read" ratio="100" config="cprefix=ufs;containers=r(2,2);oprefix=myobjects;osuffix=.jpg;objects=u(1,10000)" />
          </work>
          <work name="read" workers="128" driver="driver3" runtime="300">
            <operation type="read" ratio="100" config="cprefix=ufs;containers=r(2,2);oprefix=myobjects;osuffix=.jpg;objects=u(1,10000)" />
          </work>
          <work name="read" workers="128" driver="driver4" runtime="300">
            <operation type="read" ratio="100" config="cprefix=ufs;containers=r(2,2);oprefix=myobjects;osuffix=.jpg;objects=u(1,10000)" />
          </work>
        </workstage>
      </workflow>
    </workload>

After conducting performance pressure tests in Stage 1 and Stage 2, the results are as follows:

- Execute the following command to stop the testing service:

      sudo bash stop-all.sh
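The read test configuration in this document repeats one nearly identical `<work>` element per driver. When varying the concurrency (1, 16, 128) or the number of drivers, it may be convenient to generate that stage instead of editing it by hand. The following is a minimal sketch using Python's standard `xml.etree.ElementTree`. The function name `build_read_workload` is hypothetical, the endpoint is taken from the first sample configuration, and only the main read stage is generated (the prepare stage is omitted).

```python
import xml.etree.ElementTree as ET


def build_read_workload(endpoint, drivers, workers, runtime):
    """Build a read-only workload with one <work> element per driver."""
    workload = ET.Element(
        "workload", name="s3-sample", description="sample benchmark for s3"
    )
    ET.SubElement(
        workload,
        "storage",
        type="s3",
        config=(
            f"accesskey=root;secretkey=dump;endpoint={endpoint};"
            "path_style_access=true"
        ),
    )
    workflow = ET.SubElement(workload, "workflow")
    # The sample names the stage after its per-driver concurrency.
    stage = ET.SubElement(workflow, "workstage", name=str(workers))
    target = (
        "cprefix=ufs;containers=r(2,2);oprefix=myobjects;"
        "osuffix=.jpg;objects=u(1,10000)"
    )
    for driver in drivers:
        work = ET.SubElement(
            stage,
            "work",
            name="read",
            workers=str(workers),
            driver=driver,
            runtime=str(runtime),
        )
        ET.SubElement(work, "operation", type="read", ratio="100", config=target)
    return workload


root = build_read_workload(
    "http://localhost:29998",
    ["driver1", "driver2", "driver3", "driver4"],
    workers=128,
    runtime=300,
)
print(ET.tostring(root, encoding="unicode"))
```

The printed XML can be saved to a file under conf/ and submitted with cli.sh in the same way as the sample configuration.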